Welcome to a Team that Values You

At DataToBiz, we value the unique talents and fresh ideas everyone brings, especially our new team members. You were chosen from many applicants because we believe in the great things you’ll achieve with us. DataToBiz is evolving every day, and we’re excited to have you on this journey. We hope you’ll feel at home here!

The Story Behind Biz

DataToBiz was co-founded in 2018 by Ankush Sharma and Parindsheel Singh Dhillon to bring their expertise in Data Science beyond the daily workplace. Since then, DataToBiz has been fueled by a passion for purpose, problem-solving, and the dedication of our high-performing team members who have elevated the organization every day. Over the past four years, we’ve faced and overcome many challenges, emerging stronger with resilience and perseverance.

Our vision is twofold: to become a leading global Data Analytics organization, providing innovative solutions for some of the most pressing needs across industries, and to foster a compassionate, human-centered culture. We aim to create a workplace where our teammates are encouraged to step out of their comfort zones, enjoy a vibrant, fun-loving environment, and make a significant impact through their work.

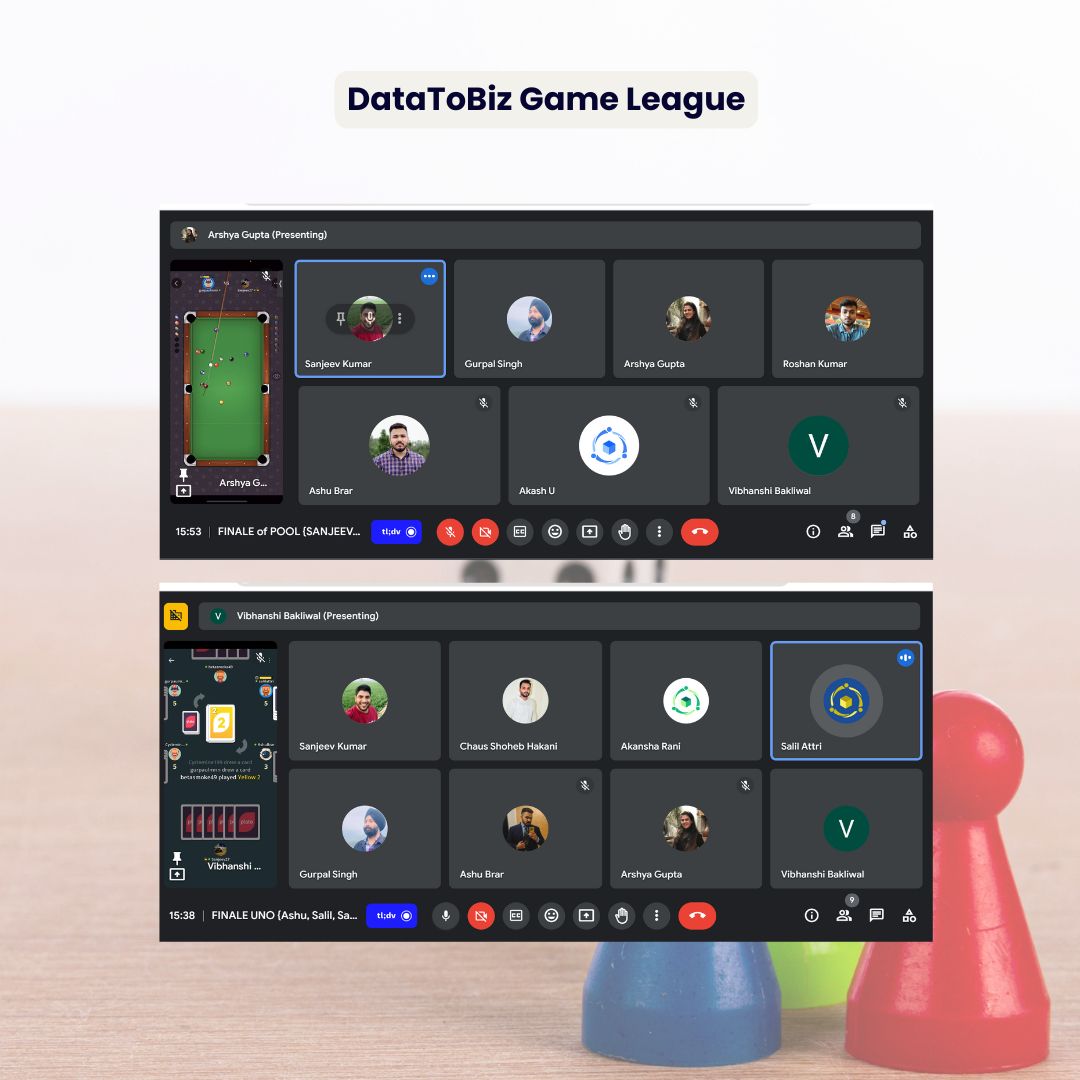

Core Values at Biz

Authenticity

Honesty

Idea Sharing

Fun Loving

Problem Solvers

Together, We Build a Better Workplace

We’re dedicated to creating a workplace where everyone feels respected, supported, and valued. Here’s how we uphold this commitment:

Respect as a Foundation

We’re committed to a culture of mutual respect and understanding for everyone on our team.

Support When Needed

A trusted team is here to listen and provide fair, caring assistance whenever concerns arise.

Privacy and Care

Any personal matters shared are treated with the utmost dignity and confidentiality.

Growing Awareness Together

Regular sessions help us all stay informed and mindful about fostering a respectful workplace.

A Shared Responsibility

Every team member plays a role in building and maintaining this safe and positive environment for all.

POSH at DataToBiz - More Than Policy, a Practice

We are committed to creating a workplace where people feel safe, respected, and genuinely supported. Good intentions alone are not enough, so we have established processes under the Prevention of Sexual Harassment (POSH) Act to address concerns with care, confidentiality, and fairness. Beyond compliance, we believe it is our shared responsibility to nurture a culture built on trust, dignity, and mutual respect.

What does this mean in practice?

- A POSH-compliant Internal Committee to address concerns objectively and sensitively

- Anonymous reporting and respectful handling of all complaints

- Regular awareness workshops and communications to foster a safe and inclusive work environment

- Zero tolerance for harassment, bias, or inappropriate conduct

Living By Our Values, Empowering Our People

Fun Workplace

- Flat Job Hierarchy

- Flexible Work Hours

- Casual Dress Code

- Disciplined Workplace Environment

Learning & Development

- Team Cohesion

- Task Autonomy

- Smooth Reporting

- Extensive Training

HR Policies

- Satisfactory Job Pay

- POSH Act for inclusivity

- Flexible Leave Policy

- Monthly Paid Leaves

- Bonus & Incentives

Motivational

- Team Appreciation

- Individual Awards

- Performance Rewards

- Salary Appraisals

Together, let’s make data-driven change that matters!

Drop us a line at

careers@datatobiz.com

Looking for Internship?

internships@datatobiz.com

For Full-Time Role

ft-careers@datatobiz.com

For Contract/Consulting Role

contract-careers@datatobiz.com

Join Us & Make an Impact with Data

Current Openings in the DataToBiz Team

PR Intern

Position: Public Relations Intern

We are seeking a Public Relations Intern who is full of creative ideas and eager to contribute on a large scale. The intern will gain visibility into the inner workings of the public relations field, provide concrete deliverables, and learn the work from top to bottom. When the internship is complete, you will be ready to join any fast-paced PR firm or take on a PR role in a corporate firm.

Responsibilities

Create the company’s PR strategy and execute it in phases

Create and curate engaging content

Communicate and build relationships with current or prospective clients

Create and distribute press releases

Build and update media lists and databases

Schedule, coordinate and attend various events

Perform research and market analysis activities

Requirements

Strong desire to learn along with professional drive

Excellent verbal and written communication skills

Proficiency in MS Office

Passion for the PR industry and its best practices

Currently enrolled in, or graduated from, a related bachelor's or master's program

Perks

Competitive Stipend

Start-up working culture experience

5-day working week

Working with a high-energy, diverse team with great ideas and experience

Platform to apply ideas from your theoretical studies and coordinate with the core founding team

Opportunity to keep abreast of the latest technology trends

Sr Power BI Developer

The person will be required to:

- Drive the development and analysis of data, reporting automation, dashboarding, and business intelligence programs.

- Manage high-volume, high-complexity projects across multiple delivery locations in a virtual environment, with excellent client communication skills

- Be highly competent technically, with significant hands-on expertise in the technical architecture and delivery of data visualization and analytics solutions

- Conduct business due-diligence activities to identify analytics-led opportunities for transforming the business

- Develop a deep understanding of systems and processes in order to extract insights from existing data, applying rigorous project management discipline to ensure the highest possible quality of delivery while managing team capacity

Required Skills and Experience

- Minimum 4 years of experience in building and optimizing Power BI dashboards

- Must be able to integrate Power BI reports into other applications using embedded analytics like Power BI service (SaaS), or by API automation.

- Should be able to write Power BI DAX queries, functions, and formulas.

- Advanced working SQL knowledge and experience working with relational databases, query authoring (SQL) as well as working familiarity with a variety of databases.

- Able to implement row level security on data and have an understanding of application security layer models in Power BI.

- Has implemented BI and reporting solutions end to end

- Hands-on experience in REST API development

- Adept in developing, publishing and scheduling Power BI reports as per the business requirements.

- Should have prior experience in creating drill-down reports and charts.

- Deployment knowledge to external servers outside Power BI.

- Proficiency with DAX expressions and M language.

- Should be capable of managing Power BI user accounts

- Experience working with Power BI Premium would be advantageous

- Should have knowledge and skills for secondary tools such as Microsoft Azure, SQL data warehouse, PolyBase, Visual Studio, etc.

Deep Learning Engineer

Key responsibilities:

- Solve complex data problems using deep learning techniques in computer vision or natural language processing.

- Deliver production-ready models to deploy in the production system.

- Meet project requirements and timelines.

- Identify success metrics and monitor them to ensure high-quality output for the client.

- Share ideas and learning with the team.

Key Skills:

- 2+ years of working experience with Python programming (Mandatory)

- Applied experience with deep learning algorithms such as Convolutional Neural Networks, Recurrent Neural Networks, etc.

- Machine learning expertise with exposure to one or more frameworks like TensorFlow, Keras, PyTorch, Caffe, MXNet, etc. (Mandatory)

- Minimum 2 years' experience in the NLP or computer vision domain (Mandatory)

- Exposure to DL model optimization and transfer learning techniques

- Exposure to SOTA algorithms in computer vision and/or natural language processing, like Faster R-CNN, YOLO, GPT, BERT, etc., for the respective domain tasks, e.g. object detection, tracking, classification, text summarization, etc.

- Strong verbal and written communication skills applied in communications with the team and client(s)

Data Engineer

Experience Required – 2-5 years

Skillset –

- Experience with Python, PySpark, Scala, Spark to write data pipelines and data processing layers.

- Strong SQL skills and proven experience in working with large datasets and relational databases.

- Experience with Data Governance (Data Quality, Metadata Management, Security, etc.)

- Understanding of prevalent big data file formats such as JSON, Avro, and Parquet

- Understanding Data Warehouse design principles (Tabular, MD).

- Minimum 1 year of experience in any cloud (AWS/GCP/Azure) platform.

- Experience in working with big data tools/environment to automate production pipelines.

- Experience with data visual analysis and BI tools (Matplotlib and Power BI)

Responsibilities –

- As a Data Engineer in our team, you should have a proven ability to deliver high quality work on time and with minimal supervision.

- Develop new data pipelines and ETL jobs that process millions of records and scale with growth.

- Optimise pipelines to handle real-time data, batch-update data, and historical data.

- Establish scalable, efficient, automated processes for complex, large scale data analysis.

- Write high-quality code to gather and manage large data sets (both real-time and batch data) from multiple sources, perform ETL, and store the results in a data warehouse or data lake.

- Manipulate and analyse complex, high-volume, high-dimensional data from varying sources using a variety of tools and data analysis techniques.

- Participate in data pipelines health monitoring and performance optimisations as well as quality documentation.

- Adopt best practices and industry standards, ensuring top-quality deliverables and playing an integral role in cross-functional system integration.

- Data integration using enterprise development tool-sets (e.g. ETL, MDM, Quality, CDC, Data Masking).

- Design and implement formal data warehouse testing strategies and plans including unit testing, functional testing, integration testing, performance testing, and validation testing.

- Interact with end users/clients and translate business language into technical requirements

Lead Talent Acquisition

Key responsibilities:

- Design and develop a recruitment strategy and processes to support the overall business strategy. Updating current and designing new recruiting procedures.

- Coordinating with respective stakeholders to identify requisition needs & determine selection criteria.

- Effectively create job descriptions and job evaluations to identify the key KRAs and KPIs across roles.

- Researching and choosing job advertisement options.

- Develop key recruitment metrics and work to reduce the overall TAT. Keeping track of recruiting metrics (e.g. time-to-fill and cost-per-hire)

- Responsible for mapping external talent pool to critical positions within the organization and creating a healthy pipeline.

- Implementing new sourcing methods (e.g. Social platforms, professional networks, and Boolean searches).

- Design an assessment framework to support the recruitment process while continuing to leverage conventional methods.

- Screening applicants for competency with the job requirements.

- Arranging telephone, video, or in-person interviews.

- Offering job positions and completing the relevant paperwork.

- Keeping track of all applicants as well as keeping applicants informed on the application process.

- Designing and leading the employer branding strategy. Recommending ways to improve employer brand

- Ability to work in a fast-paced environment and consistently meet internal and external deadlines.

Key experience & skills:

- 2-5 years of IT recruitment experience is mandatory

- Experience in Data Science recruitment would be preferable

- Strong analytical and problem-solving skills.

- Must have strong exposure in designing and implementing recruitment processes from scratch. Must have time management and negotiation skills.

- Strong understanding of the Indian IT talent market and talent availability.

- Proven experience in hiring for niche technologies as per market trends.

- Proven work experience as a Lead Talent Acquisition, Recruitment Consultant or Recruiting Coordinator.

- Must have hands-on experience working with and managing an ATS

- Experience in recruitment processes and platforms e.g. Naukri, Zoho etc

- A good understanding of technology and technical skills.

- Good interpersonal and decision-making skills.

- Strong sales & negotiation skills

Business Development Manager

Required Experience, Skills and Qualifications:

• Proven service-selling experience in the B2B IT domain.

• Past experience in international sales, primarily selling custom service solutions.

• Ability to develop good relationships with current and potential clients.

• Strong research skillsets required before client interaction.

• Strong Persuasion and Influencing Skills

• Excellent Negotiation Skills.

• Excellent leadership and communication skills.

• Knowledge of productivity tools and software.

• High attention to detail and a focus on fact-based decision making.

• IT Solution/ Solution Selling /Mobility/Pre-Sales/Outbound(Software sales)/ Business Development/Key Account Management exposure/experience.

• Analytics sales experience would be an add-on advantage.

Responsibilities and Duties:

• Oversee the complete sales process, from inbound and outbound to inside sales, to attract new clients (both Indian and international) and drive the revenue of the organisation.

• Qualifying new inbound leads and moving them through the sales pipeline.

• Generate new outbound leads from LinkedIn and similar platforms and move them through sales pipelines.

• Research, identify, and prospect for new target companies within multiple niche industries.

• Develop and execute a strong prospecting plan of action, including lead management strategies and communication strategies for better conversions. This includes understanding the client requirements, creating BRDs, coming up with the solution in association with the tech team, and submitting the required proposals.

• Talk to influencers and decision-makers involved in procuring IT/ software development services

• Coordinate with the technical team to schedule pre-sales discussions and ensure smooth communication between the prospect and the sales team

• Full sales life-cycle expertise, Handling the Up-level lead generation

• Set up online demo meetings and face-to-face meetings

• Follow up regularly with prospects who have been identified as potential clients and who have shown interest in using our services

• Serve as the first in-depth point of contact for customers

• Provide product/service information to prospective customers

• Upselling the services to existing clients with prospect nurturing.

• Maintain fruitful relationships with clients and address their needs effectively.

QA / Software Tester

Responsibilities and Duties:

● Regression testing of most recent builds

● Thorough understanding of Software Development Life Cycle and Quality methodologies

● Strong in Web Services Automation

● Proficient in front-end, backend, system testing.

● Experience testing large-scale, complex products with software and infrastructure components.

● Develop and update/extend backend integration / system / performance / etc. automated test frameworks

● Building highly scalable automation frameworks for web, mobile, system wide tests.

● Reviewing pull requests (by Devs and SDETs), reviewing for testability, providing feedback on engineering design docs, and writing test-specific documentation

● Authoring comprehensive test plans and test strategies that span software and infrastructure components and ensure high-quality deliverables.

● Write test plans, test strategy and test cases (automated)

● Troubleshoot critical defects in software coding

● Review and analyse system specifications

● Execute test cases and analyse results

● Evaluate product code according to specifications

● Create logs to document testing phases and defects

● Help troubleshoot issues

● Conduct post-release/ post-implementation testing

● Work with cross-functional teams to ensure quality throughout the software development lifecycle.

Required Experience, Skills and Qualifications:

● 2-5 years of proven experience in owning QA – Manual and Automation streams

● 5 years of Unix/Linux experience (shell/tools/kernel/networking)

● Proven experience with automation tools and frameworks such as Cucumber, Selenium, TestNG, Postman, and JMeter

● Experience integrating the automation suite with CI/CD pipelines such as Jenkins.

● Demonstrated ability in creating automation frameworks at scale

● Lead QA efforts in an individual capacity across manual and automation streams

● Expert at statistical analysis and failure pattern identification

● Grey and black box testing methodologies.

Senior Business Intelligence Developer | BI | ADF

This is a unique opportunity to be involved in delivering leading-edge business analytics using the latest BI tools, such as cloud-based databases, self-service analytics, and leading visualisation tools, supporting the company’s aim to become a fully digital organisation.

Key Accountabilities

• Development and creation of high-quality analysis and reporting solutions

• Development of ETL routines using SSIS and ADF into Azure SQL Data Warehouse

• Integration of global applications such as D365, Salesforce, and Workday into the cloud data warehouse.

• Experience using D365 ERP systems and creating BI reports.

• Development of SQL procedures and SQL views to support data load as well as data visualisation

• Development of data mapping tables and routines to join data from multiple different data sources

• Performance tuning SQL queries to speed up data loading time and query execution.

• Liaising with business stakeholders, gathering requirements and delivering appropriate technical solutions to meet the business needs.

Professional Skills

• Strong communications skills and ability to turn business requirements into technical solutions

• Experience in developing data lakes and data warehouses using Microsoft Azure

• Demonstrable experience designing high-quality dashboards using Tableau and Power BI

• Strong database design skills, including an understanding of both normalised form and dimensional form databases.

• In-depth knowledge and experience of data-warehousing strategies and techniques, e.g. Kimball data warehousing

• Experience in Azure Analysis Services and DAX (Data Analysis Expressions)

• Experience in cloud-based data integration tools like Azure Data Factory or SnapLogic

• Experience in Azure DevOps is a plus

• Familiarity with agile development techniques and objectives

Key Experiences

• Excellent communication skills

• Over 5 years’ experience in Business Intelligence Analyst or Developer roles

• Over 4 years’ experience using Microsoft Azure for data warehousing

• Experience in designing and performance tuning data warehouses and data lakes.

• 4+ years extensive experience in developing data models and dashboards using Tableau/Power BI within an IT department

• Self-starter who can manage own direction and projects

• Being delivery-focused with a can-do attitude in a sometimes-challenging environment is essential.

• Experience using Tableau to visualise data held in SQL Server

• Experience working with finance data highly desirable

Data Engineer | GCP Cloud | Python | ETL

This is a hands-on, entrepreneurial-focused position. This key engineering role is expected to design, develop, deliver, and support data solutions and collaborate with other key team members in Design, Engineering, Product Management, and various Customer and Stakeholder roles.

You must be comfortable and effective as an engineering team player in our dynamic and fast-paced environment. Our culture encourages experimentation and favours agile and rapid iteration over the pursuit of immediate perfection. We are flexible and supportive of remote work arrangements.

Specific responsibilities:

● Work as part of an implementation team from concept to operations, providing deep technical subject matter expertise for successful deployment.

● Ingest and integrate massive datasets from multiple data sources, while designing and developing solutions for data integration, data modeling, and data inference and insights.

● Design, monitor, and improve development, test, and production infrastructure and automation for data pipelines and data stores.

● Troubleshoot and performance tune data pipelines and processes for data ingestion, merging, and integration across multiple technologies and architectures including ETL, ELT, API, and SQL.

● Test and compare competing solutions and provide informed POV on the best solutions for data ingestion, transformation, storage, retrieval, and insights.

● Work well within the quality and code standards and the engineering practices that the team has in place and maintains.

Experience and Skills:

● 3-5 years of data engineering experience required, 3+ years of Google Cloud Platform (GCP) experience desired. Equivalent cloud platform experience considered.

● 2+ years of coding in Python.

● 2+ years of experience working with JSON, SQL, document data stores, and relational databases.

● Solid understanding of ETL/ELT concepts, data architectures, data modelling, data manipulation languages and techniques, and data query languages and techniques.

● Experience working in GCP-based Big Data deployments (Batch/Real-Time) leveraging BigQuery, Bigtable, Google Cloud Storage, Pub/Sub, Data Fusion, Dataflow, Dataproc, Airflow, etc.

● In-depth understanding of Google’s data product technology and underlying data architectures. Equivalent cloud platform experience considered.

![First_Page_(2)[1]](https://careers.datatobiz.com/wp-content/uploads/2024/08/First_Page_21.jpg)

![First_Page[1]](https://careers.datatobiz.com/wp-content/uploads/2024/08/First_Page1.jpg)